Ostrichinator

This project is maintained by coxlab

Are Deep Learning Algorithms Easily Hackable?

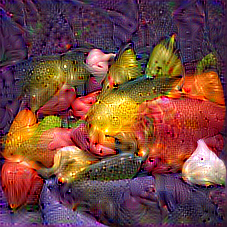

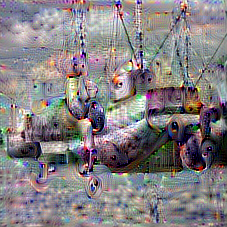

Deep learning algorithms keep pushing the boundaries of computer vision, breaking records in the ImageNet Challenge (ILSVRC) year after year [1-6]. At the same time, researchers have reported that images with imperceptible distortions [7,9], or images that are artificially generated and completely unrecognizable [8], can in many cases easily fool these algorithms and cause them to report high confidence for utterly wrong classes.

In this project, we built a web demo in which users can explore this intriguing phenomenon on multiple powerful deep learning networks [1-3] simultaneously, given any image and object class. By answering the questionnaire, users can also help us better characterize deep learning algorithms and the ways they agree or disagree with human vision.

Our algorithm improves on the methods proposed in [7,10] and is implemented on top of MatConvNet [11] and minConf [12], so it should be generally efficient. However, because of limited hardware resources, the demo site runs in CPU mode and cannot serve many concurrent hacking requests. We therefore encourage users who plan to make frequent requests to run our freely downloadable source code on their own machines, where GPU mode is also fully supported. Setting up mirror sites for this web demo would be very much appreciated, and information about them can be shared through the wiki page.

Do try out the web demo before reading the following results, and see whether you also think deep learning algorithms are easily fooled.
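To make the idea of fooling a classifier with a small distortion concrete, here is a minimal sketch in plain Python. It is not the algorithm used by this project: it assumes a toy linear softmax classifier with random weights in place of a deep network, and it uses plain projected gradient ascent rather than the projected quasi-Newton solver from minConf [12]. The names `predict`, `fool`, `epsilon`, and `step` are hypothetical and chosen only for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw class scores into probabilities (numerically stable)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, biases, image):
    """Class probabilities of a toy linear classifier (assumption: not a deep net)."""
    scores = [sum(w * x for w, x in zip(row, image)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)


def fool(weights, biases, image, target, epsilon=0.1, step=0.01, iterations=200):
    """Raise the confidence of `target` while changing each pixel by at most `epsilon`."""
    adversarial = list(image)
    for _ in range(iterations):
        probs = predict(weights, biases, adversarial)
        for i in range(len(image)):
            # Gradient of log p(target) with respect to pixel i for linear scores:
            # W[target][i] - sum_k p_k * W[k][i]
            grad = weights[target][i] - sum(p * row[i] for p, row in zip(probs, weights))
            moved = adversarial[i] + step * grad
            # Project back onto the allowed box: near the original pixel, inside [0, 1].
            lo = max(0.0, image[i] - epsilon)
            hi = min(1.0, image[i] + epsilon)
            adversarial[i] = min(hi, max(lo, moved))
    return adversarial


# Toy setup: 3 classes, a 16-pixel "image", random weights (all hypothetical).
random.seed(0)
num_classes, num_pixels = 3, 16
weights = [[random.uniform(-1, 1) for _ in range(num_pixels)]
           for _ in range(num_classes)]
biases = [0.0] * num_classes
image = [random.random() for _ in range(num_pixels)]
target = 2

adversarial = fool(weights, biases, image, target)
before = predict(weights, biases, image)[target]
after = predict(weights, biases, adversarial)[target]
largest_change = max(abs(a - b) for a, b in zip(adversarial, image))
print(f"target-class confidence: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {largest_change:.3f}")
```

The box projection is what keeps the distortion small: every pixel stays within `epsilon` of its original value and inside the valid range, while the gradient steps push the classifier toward the chosen class. A real deep network would need backpropagation to obtain the gradient, which is the role a library such as MatConvNet [11] plays.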


Citing Ostrichinator

An arXiv paper describing the algorithmic details of this project will be released soon. In the meantime, you can cite this project as follows.

@misc{ostrichinator,
  author = {C.-Y. Tsai and D. Cox},
  title = {Are Deep Learning Algorithms Easily Hackable?},
  howpublished = {\url{http://coxlab.github.io/ostrichinator}},
  year = 2015
}

References

[1] Y. Jia, E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Girshick, S. Guadarrama, and T. Darrell. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the ACM International Conference on Multimedia, pages 675-678. ACM, 2014.

[2] K. Chatfield, K. Simonyan, A. Vedaldi, and A. Zisserman. Return of the devil in the details: Delving deep into convolutional nets. CoRR, abs/1405.3531, 2014.

[3] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. CoRR, abs/1409.1556, 2014.

[4] A. Krizhevsky, I. Sutskever, and G. E. Hinton. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, pages 1097-1105, 2012.

[5] P. Sermanet, D. Eigen, X. Zhang, M. Mathieu, R. Fergus, and Y. LeCun. OverFeat: Integrated recognition, localization and detection using convolutional networks. CoRR, abs/1312.6229, 2013.

[6] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich. Going deeper with convolutions. CoRR, abs/1409.4842, 2014.

[7] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. J. Goodfellow, and R. Fergus. Intriguing properties of neural networks. CoRR, abs/1312.6199, 2013.

[8] A. Nguyen, J. Yosinski, and J. Clune. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. CoRR, abs/1412.1897, 2014.

[9] I. J. Goodfellow, J. Shlens, and C. Szegedy. Explaining and harnessing adversarial examples. CoRR, abs/1412.6572, 2014.

[10] K. Simonyan, A. Vedaldi, and A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. CoRR, abs/1312.6034, 2013.

[11] A. Vedaldi and K. Lenc. MatConvNet – convolutional neural networks for MATLAB. CoRR, abs/1412.4564, 2014.

[12] M. W. Schmidt, E. Berg, M. P. Friedlander, and K. P. Murphy. Optimizing costly functions with simple constraints: A limited-memory projected quasi-Newton algorithm. In International Conference on Artificial Intelligence and Statistics, 2009.

[13] G. E. Hinton, O. Vinyals, and J. Dean. Distilling the knowledge in a neural network. In NIPS 2014 Deep Learning Workshop, 2014.

[14] W. J. Scheirer, L. P. Jain, and T. E. Boult. Probability models for open set recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 36, November 2014.

[15] J. Long, N. Zhang, and T. Darrell. Do convnets learn correspondence? CoRR, abs/1411.1091, 2014.

[16] A. Mahendran and A. Vedaldi. Understanding deep image representations by inverting them. CoRR, abs/1412.0035, 2014.

[17] A. S. Razavian, H. Azizpour, A. Maki, J. Sullivan, C. H. Ek, and S. Carlsson. Persistent evidence of local image properties in generic convnets. CoRR, abs/1411.6509, 2014.

[18] S. Gu and L. Rigazio. Towards deep neural network architectures robust to adversarial examples. CoRR, abs/1412.5068, 2014.

[19] D. L. Yamins, H. Hong, C. Cadieu, and J. J. DiCarlo. Hierarchical modular optimization of convolutional networks achieves representations similar to macaque IT and human ventral stream. In Advances in Neural Information Processing Systems, pages 3093-3101, 2013.

[20] E. Vig, M. Dorr, and D. Cox. Large-scale optimization of hierarchical features for saliency prediction in natural images. In Computer Vision and Pattern Recognition (CVPR), 2014 IEEE Conference on, pages 2798-2805, June 2014.